Introducing PReF: Preprint Review Features

Post by Victoria Yan, Iratxe Puebla, and Jessica Polka

Preprint reviews hold the potential to build trust in preprints and drive innovation in peer review. However, because comments and reviews on preprints can be contributed through such a variety of platforms, it can be difficult for readers to gain a clear picture of the process that led to the reviews linked to a particular preprint.

To address this, ASAPbio organized a working group to develop a set of features that describe preprint review processes in a way that is simple to implement. We are proud to share Preprint Review Features (PReF), described in a white paper posted as an OSF Preprint. PReF consists of eight key-value pairs that describe the key elements of preprint review. The white paper includes detailed definitions of each feature, an implementation guide, and an overview of how the characteristics of active preprint review projects map to PReF. We also developed a set of graphic icons (shown in this post's feature image) that we encourage the preprint review community to reuse alongside PReF.

While the Peer Review Terminology developed by the STM working group and the Open Peer Review taxonomy provided useful background for our discussions, they were designed with a focus on journal-based peer review and do not capture all the elements that can be part of preprint review. We acknowledge that there are nuances and differing views about what constitutes “peer review,” “feedback,” and “commenting”; rather than create strict definitions, we aimed to parse out the important aspects of the process involved in any form of review on preprints, in a format that platforms that host, coordinate, or aggregate such activities can use. We are therefore glad to see that PReF has already been implemented on ReimagineReview and on review aggregators such as Early Evidence Base and Sciety. We hope that the development and adoption of PReF will improve the visibility and discoverability of preprint review.

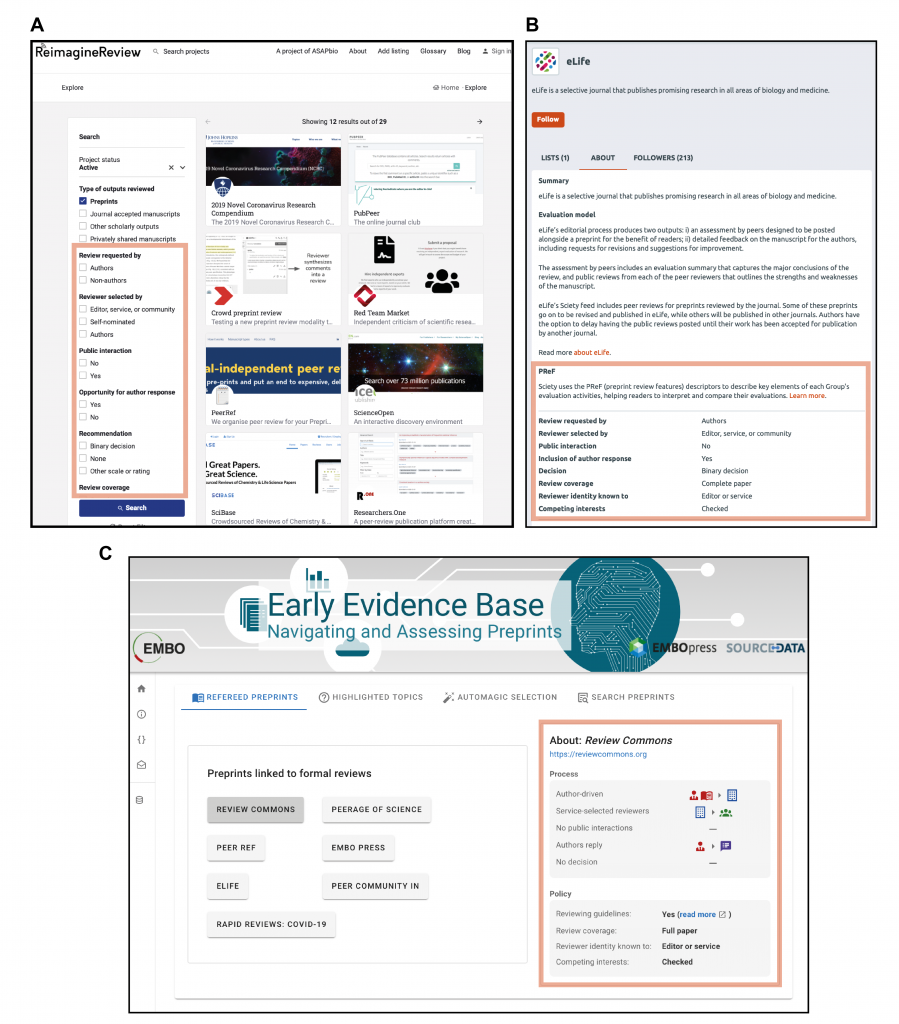

Figure 2. A. PReF implementation on ReimagineReview (https://reimaginereview.asapbio.org), demonstrating search by specific fields and combinations of fields. B. PReF implementation on Sciety (https://sciety.org). C. PReF implementation on Early Evidence Base (https://eeb.embo.org). Red boxes highlight where PReF is used on all three projects.

On ReimagineReview, PReF can be used as a search filter for preprint review services. For example, after selecting “Active” projects with “Preprints” as the type of output reviewed, you can use the PReF filters to identify preprint review services where authors request the review and an author response is included.
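To make the filtering idea concrete, here is a minimal Python sketch that treats each service's description as a dictionary of PReF-style key-value pairs and keeps only the services matching every selected filter. The key names and values (`status`, `output_type`, `review_requested_by`, `author_response`) and the example services are hypothetical placeholders, not the official PReF vocabulary or ReimagineReview's actual data model; the white paper gives the real field definitions.

```python
from typing import Dict, List

# Hypothetical PReF-style records: each service is described by key-value pairs.
# Field names and values are illustrative placeholders, not the official PReF terms.
Record = Dict[str, str]

SERVICES: List[Record] = [
    {"name": "Service A", "status": "Active", "output_type": "Preprints",
     "review_requested_by": "Author", "author_response": "Included"},
    {"name": "Service B", "status": "Active", "output_type": "Preprints",
     "review_requested_by": "Others", "author_response": "Not included"},
    {"name": "Service C", "status": "Inactive", "output_type": "Preprints",
     "review_requested_by": "Author", "author_response": "Included"},
]


def filter_services(records: List[Record], **criteria: str) -> List[Record]:
    """Return the records whose values match every key-value pair in `criteria`."""
    return [r for r in records
            if all(r.get(key) == value for key, value in criteria.items())]


matches = filter_services(
    SERVICES,
    status="Active",
    output_type="Preprints",
    review_requested_by="Author",
    author_response="Included",
)
print([r["name"] for r in matches])  # ['Service A']
```

Combining the criteria with a logical AND mirrors selecting several filters at once; a search that accepts several values for one field would need a set of allowed values per key instead of a single string.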

On Early Evidence Base, you can find preprints linked to their reviews, together with a PReF description of each review service. Early Evidence Base aggregates refereed preprints from Review Commons, Peerage of Science, PeerRef, EMBO Press, eLife, Peer Community In, and Rapid Reviews: COVID-19.

Sciety is a platform where researchers and groups can curate and evaluate peer-reviewed preprints. Sciety uses PReF to describe the review processes of Sciety groups.

Continued tracking of preprint review trends

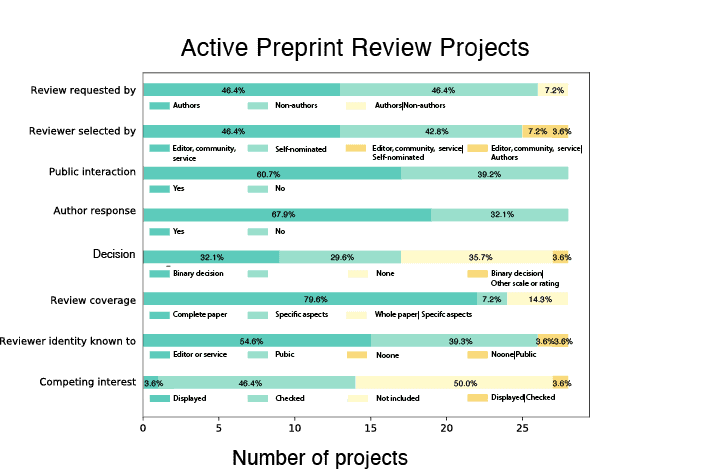

We provide a snapshot of PReF fields across projects listed in ReimagineReview as of December 2021. We found that that services that are initiated by authors tend to exhibit a different profile across other PReF fields than those initiated by commenters or other review providers.

Please see the White paper to read about the current landscape.

Figure 3. Active preprint review projects in ReImagineReview and their distribution in each of the PReF fields.
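A distribution like the one in Figure 3 amounts to counting, for each PReF field, how many active projects take each value, and then comparing subgroups such as author-initiated services versus the rest. The Python sketch below shows one way to do this with `collections.Counter`. The records and field names are made-up placeholders, and this is not the code used for the preliminary analysis, which works from the actual ReimagineReview data.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical PReF-style records for active projects; field names and values
# are illustrative placeholders, not real ReimagineReview data.
Record = Dict[str, str]

PROJECTS: List[Record] = [
    {"review_requested_by": "Author", "author_response": "Included", "recommendation": "Included"},
    {"review_requested_by": "Author", "author_response": "Included", "recommendation": "Not included"},
    {"review_requested_by": "Others", "author_response": "Not included", "recommendation": "Not included"},
]


def field_distribution(records: List[Record]) -> Dict[str, Counter]:
    """Count how often each value occurs for each field across the records."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for record in records:
        for field, value in record.items():
            counts[field][value] += 1
    return dict(counts)


def split_by(records: List[Record], field: str, value: str) -> Tuple[List[Record], List[Record]]:
    """Partition records into (matching, non-matching) on a single field."""
    matching = [r for r in records if r.get(field) == value]
    rest = [r for r in records if r.get(field) != value]
    return matching, rest


overall = field_distribution(PROJECTS)
print(overall["author_response"])  # Counter({'Included': 2, 'Not included': 1})

# Compare the profile of author-initiated services with all other services.
author_led, others = split_by(PROJECTS, "review_requested_by", "Author")
print(field_distribution(author_led)["author_response"])  # Counter({'Included': 2})
print(field_distribution(others)["author_response"])      # Counter({'Not included': 1})
```

Counting per field keeps the analysis independent of how many features a record has, so the same function would still work if PReF gains or changes fields.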

We encourage continued tracking of preprint review projects to identify emerging trends. ReimagineReview data will be deposited on Zenodo quarterly. The data on preprint review projects with their PReF fields are available here, and the code used to generate the preliminary analysis is available here.

Evolving terms for an evolving field

PReF is one of the first attempts to describe the processes behind preprint review, and as the field continues to innovate, we expect PReF to be refined as well. Whatever direction this evolution takes, we believe that clear and consistent descriptions of preprint review processes will foster transparency. We look forward to continued conversations about the features of peer review processes across a broad range of research outputs, including preprints.

Working group members (listed alphabetically)

Michele Avissar-Whiting (Research Square)

Philip N. Cohen (SocArXiv)

Phil Hurst (Royal Society)

Thomas Lemberger (EMBO/EEB/Review Commons)

Gary McDowell (Lightoller/DocMaps)

Damian Pattinson (eLife)

Jessica Polka (ASAPbio)

Iratxe Puebla (ASAPbio)

Tony Ross-Hellauer (TU Graz/DocMaps)

Richard Sever (Cold Spring Harbor Laboratory and bioRxiv/medRxiv)

Kathleen Shearer (COAR Notify)

Gabe Stein (KFG/DocMaps)

Clare Stone (SSRN)

Victoria T. Yan (ASAPbio, presently EMBL)

Huge thanks to everyone in the working group. This work was the result of many stimulating discussions within a very diverse group.

We are glad to see PReF in use. To provide feedback on our work, or if you have questions about implementing PReF, please leave a comment on this blog post or on the white paper, or email Victoria at victoria.yan@embl.de.