Community call recap: recognizing and combating bias with innovative peer review

- ReimagineReview news

Sep 04

Bias in publishing is a serious issue, as even small advantages in acceptance rates can have large effects when propagated forward to grants, jobs, and awards. Since traditional journal peer review happens mostly in a black box, it’s hard to understand the extent of the problem, let alone devise solutions to address it.

As leaders of experimental peer review trials, platforms, and services work to reimagine the peer review workflow, they can also innovate to reduce bias. To start a conversation about these issues, we hosted our first ReimagineReview community call on August 16th. Here’s a summary of some of the highlights.

Reviewer gender and nationality can impact editorial decisions

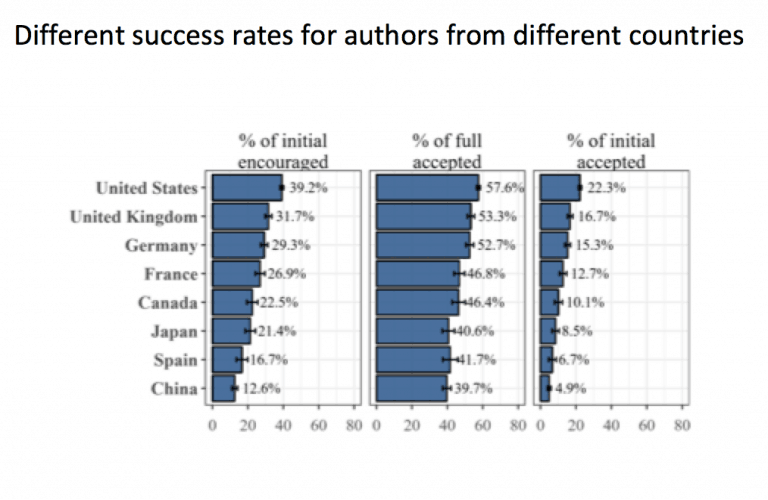

Our first speaker, Dr. Jennifer Raymond (Professor of Neurobiology at Stanford University and eLife Reviewing Editor), discussed key findings from her study of disparities in acceptance rates at eLife. This study showed that male corresponding authors had a 13% advantage in overall acceptance over female authors. The disparities in success rates were even greater between authors from different countries. Jennifer emphasized that in addition to implicit bias, factors such as domestic responsibilities, funding, mentoring, and ‘opting out’ of competition could affect success rates in publishing.

To better isolate the effect of bias, Jennifer’s team asked whether the gender of the reviewers correlated with the outcome of review. If reviewers were unbiased, they would provide consistent evaluations regardless of their own demographic profiles. Instead, the results showed that reviewers have a preference for authors of the same gender and nationality. Because male reviewers and reviewers from the US and Europe are more numerous, the effects of this homophily are more likely to benefit authors who match the better-represented demographics.

Favoritism works across long distances in coauthor networks

Our second speaker, Dr. Misha Teplitskiy (Assistant Professor at the School of Information at the University of Michigan), discussed recent findings from his work based on data provided by PLOS ONE. Using co-authorship distance as a measure of intellectual closeness, his group found that the closer the reviewer is to the author, the lower the likelihood of rejection. This correlation persists even for co-authorship distances greater than two (i.e., when authors and reviewers have never been coauthors), which by conventional standards should involve little to no conflict of interest. Thus intellectual closeness may lead to favourable outcomes for reasons beyond nepotism, potentially because members of the same community share views on what counts as valid work.
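To make the idea of co-authorship distance concrete, here is a minimal sketch that treats it as the shortest path between two people in a graph where an edge links any two researchers who have published together. The function names, the toy paper list, and the convention that direct coauthors sit at distance 1 are illustrative assumptions, not the method or data used in the study.

```python
from collections import defaultdict, deque


def build_coauthor_graph(papers):
    """Build an undirected graph linking everyone who shares a paper.

    `papers` is a list of author-name lists (hypothetical input format).
    """
    graph = defaultdict(set)
    for authors in papers:
        for a in authors:
            graph[a].update(b for b in authors if b != a)
    return graph


def coauthorship_distance(graph, source, target):
    """Return the number of co-authorship links on the shortest path
    from `source` to `target`, or None if they are not connected.

    Assumed convention: direct coauthors are at distance 1.
    """
    if source == target:
        return 0
    seen = {source}
    queue = deque([(source, 0)])
    # Breadth-first search visits people in order of increasing distance,
    # so the first time we reach the target we have the shortest path.
    while queue:
        person, dist = queue.popleft()
        for neighbor in graph.get(person, ()):
            if neighbor == target:
                return dist + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


# Toy example with made-up names: the author and reviewer never
# published together, but are linked through two intermediaries.
papers = [
    ["author", "A"],
    ["A", "B"],
    ["B", "reviewer"],
    ["loner"],
]
graph = build_coauthor_graph(papers)
distance = coauthorship_distance(graph, "author", "reviewer")
```

In this toy network the author and reviewer are three links apart, so a conventional conflict-of-interest check based on direct co-authorship would not flag the pair.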

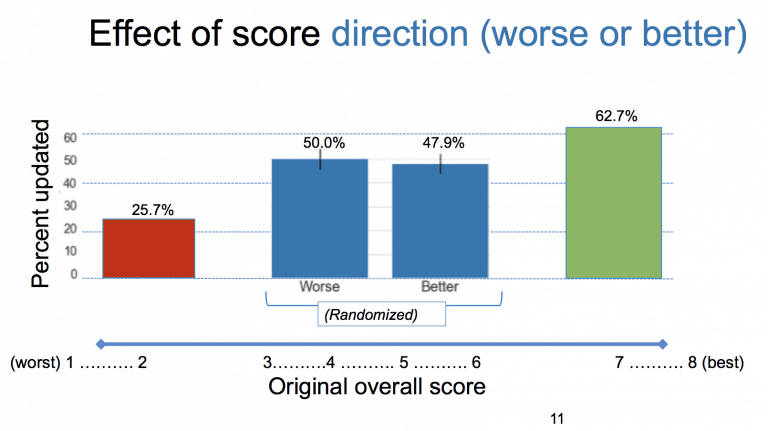

Next, Misha highlighted findings from his recent study on biases in committees, using data from a research proposal competition review at Harvard Medical School. Reviewers evaluated proposals as usual, then received anonymous artificial scores. The reviewers were told that these were “scores from fellow reviewers,” then were given a chance to update their original scores. In these committees, bad scores were much less likely to be changed than favorable scores: bad scores were “sticky.” Furthermore, highly cited scientists updated their scores less than others, and men updated 13% less than women. The percentage that updated correlated with the gender composition of the subfield: women were more likely to update their scores in subfields where women are under-represented. Thus, opinions from under-represented demographics are more likely to be discounted.

You can watch the full recordings of the talks here.

Practical ideas

After the talks, we launched into an unrecorded discussion from which emerged a number of ideas for practical interventions that can reduce bias in the peer review process.

- Remind reviewers of the potential for bias when soliciting reviews. Bahar Mehmani, Reviewer Experience Lead at Elsevier, reported that Elsevier is working towards raising awareness about unconscious bias. One active strategy is to inform reviewers in their review invitation letters.

- Invite more diverse reviewers. Theo Bloom, Executive Editor of The BMJ, explained that the journal had been inviting more female reviewers. Interventions may also rely on broadening the pool of reviewers. Daniela Saderi, a co-founder of PREreview, mentioned that her initiative seeks to train early career researchers as new peer reviewers.

- Remind authors to recommend diverse reviewers. When submitting a paper, many journals ask authors to recommend suitable reviewers. Stephen Floor, an Assistant Professor at UCSF, suggested that this communication could be accompanied by a request to select reviewers of varied genders and from various geographical locations.

- Simply point out that bias exists. Jennifer Raymond called attention to watchdog groups such as BiaswatchNeuro’s Journal Watch. A few months after they started tracking journals in January 2018, which also coincided with the posting of this study by Shen et al. on bioRxiv in March 2018, the representation of women as authors in certain journals seemed to trend upward. Despite a backslide by the end of 2018, this raises hope for the impact of transparency on public accountability.

Next steps

The studies discussed in this call were in many cases only possible because journals were willing to disclose otherwise hidden information about their editorial processes. Our next community call, during Peer Review Week on Friday, September 20, will further explore the theme of transparency as it relates to quality in peer review. Read more and register here!

Victoria Yan, ReimagineReview Coordinator

victoria.yan@asapbio.org

Header photo by Sarah Kufeß on Unsplash